These early experiences with my head in an Oculus Go have left me wanting more. All of them are linear: apart from the ability to look around, they are not interactive. They are all good introductions to 360° experiences, but after a while they leave you wanting, or needing, something more. Binocular 3D and 360° look-around only hold novelty value for a short time, so producers will be under creative pressure to come up with something different or to offer a compelling story. Once the novelty wears off, there has to be enough motivation to bother putting on a VR headset.

The Missed Spaceflight

https://www.oculus.com/experiences/gear-vr/1231174300328686/

I hadn’t set up parental controls on the device, so I was careful to start the experience myself before handing over the headset. In my opinion, this is the easiest VR experience to share with the whole family, young and old. I shared it with family members aged from 10 to 75, and in every case it was their first experience with the Oculus Go, and a very positive one.

I did find that the text titles at the start are placed too close to the viewpoint, making them unreadable. A few seconds later they are gone and the experience begins, with superbly detailed rendering, audio and movement. It is a very nice, smooth and impressive introductory 360° VR experience.

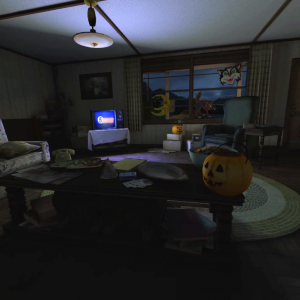

Face your fears – Stranger Things

https://www.oculus.com/experiences/gear-vr/1168200286607832/

A very nice example of a rendered 360° experience. There is no interaction, but the scenes are instantly recognisable to fans of the programme, and combined with the soundscape there are plenty of jump scares. One member of my family who is a Stranger Things fan didn’t sit through the whole experience because it was ‘too scary’.

Lego Batman

https://samsungvr.com/view/sDrBlQe0peI

SamsungVR is one of the many media player apps designed to deliver 360° VR video. The Lego Batman experience is neat, entertaining and humorous, with some excellent attention to detail.

Blade Runner 2049: Replicant Pursuit

https://www.oculus.com/experiences/go/1558723417494666/

Another very cool, short and simple linear 360° experience. There is some interaction at the start: setting the sound level and choosing whether subtitles are on or off. When needed, subtitles are rendered on a panel that follows your head movement. Unusually, you make selections not with the laser pointer but by looking at the button you want and pressing the Go’s trackpad. The 3D environment is very clearly computer generated, but the movement is super smooth, purposeful and not rushed. The rain is a nice touch. The soundscape draws you in; after this experience I found myself going back to watch the film again.

Summing up

I enjoyed this initial dip into VR and appreciate the huge amount of work that has gone into getting the attention to detail and the feel of each experience right for its franchise. I’d happily consume more of the same, but they do leave me craving more interaction. In fact, while these are all free to view, I would want something more from paid content. Technically, I am assuming the main content of each is pre-rendered, with elements such as the subtitles in the Blade Runner example overlaid on the running video. I am looking forward to playing with the tools used to create some aspects of these and delving into the production processes.